GPUs

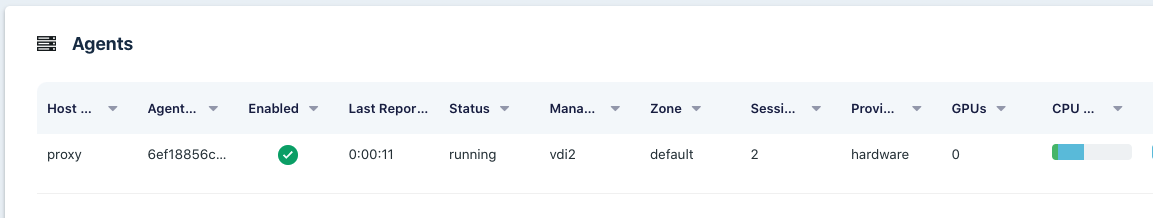

Agents table shows 0 GPUs

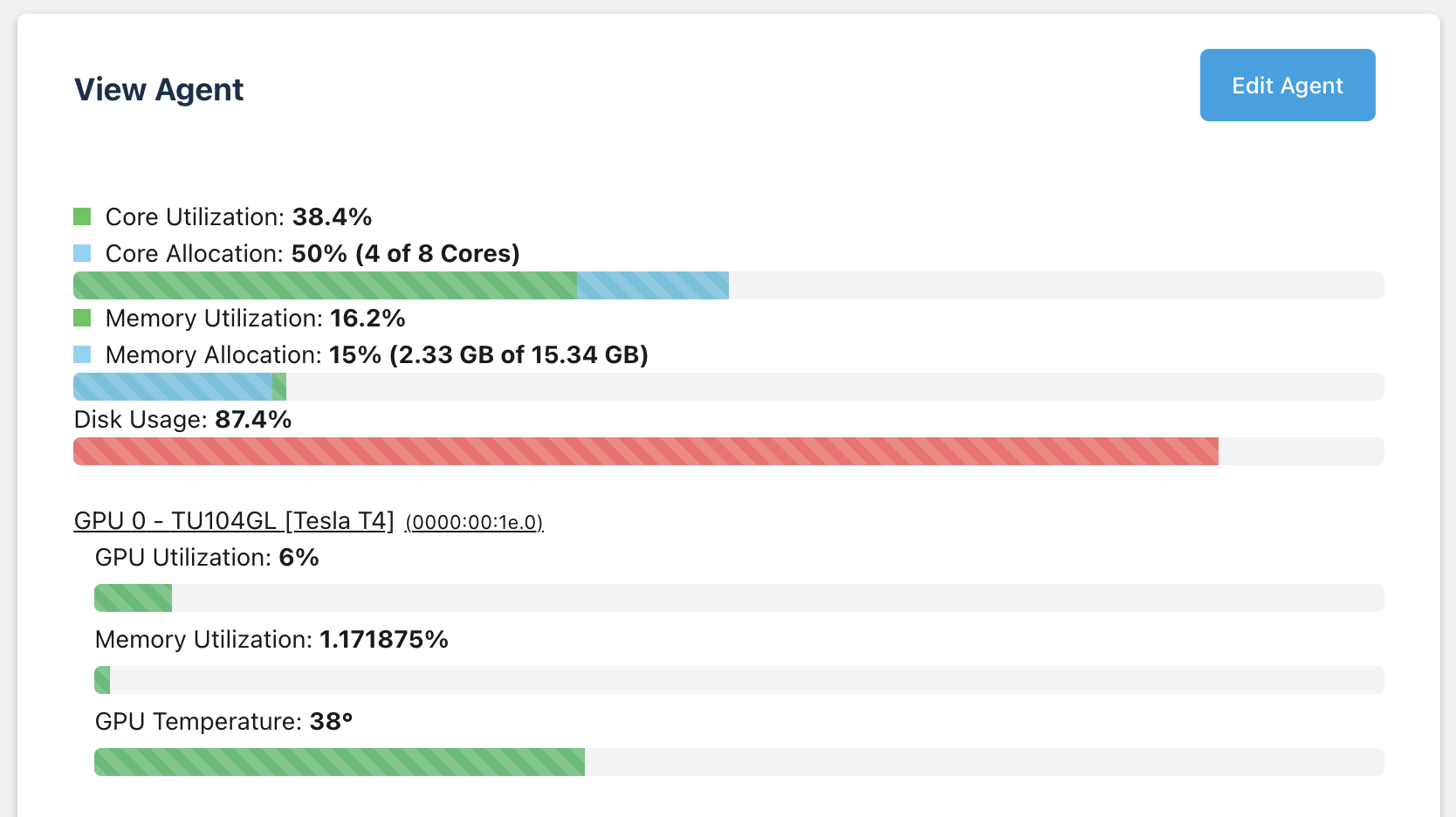

The first image below is the Agents list. The GPUs column should show the number of GPUs available on that agent. The second image shows the top of the View Agent panel. If any GPUs were detected, this view shows details about them. Finally, the third image below is the GPU Info details shown on the View Agent panel.

Kasm uses multiple methods to gather information about GPUs. If the GPU Info JSON contains some, but not all, information about your GPUs and the agent is not reporting any GPUs, ensure that the NVIDIA Docker toolkit is installed and that you restarted the Docker daemon. Run the command `sudo docker system info | grep Runtimes` and check that `nvidia` is listed.

To enable GPU support on an agent, the NVIDIA GPU acceleration driver must be installed on the agent. Reference the GPU installation guide for more information.
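The runtime check can be wrapped in a small helper so it is easy to script across several agents. This is a minimal sketch, not part of Kasm: the function name `has_nvidia_runtime` is hypothetical, and it assumes the `Runtimes:` line format that `docker system info` normally prints.

```shell
# Succeeds if the "Runtimes:" line of `docker system info` output (read from
# stdin) lists the nvidia runtime as a whole word.
# has_nvidia_runtime is a hypothetical helper name used for illustration.
has_nvidia_runtime() {
    grep 'Runtimes:' | grep -qw 'nvidia'
}

# Usage on an agent (requires Docker, so it is left commented out here):
#   if sudo docker system info | has_nvidia_runtime; then
#       echo "nvidia runtime is registered"
#   else
#       echo "nvidia runtime missing: install the toolkit, then restart Docker"
#   fi
```

Matching only the `Runtimes:` line avoids a false positive from other lines of the output that happen to mention NVIDIA.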

Agents List

Agent Details

Agent GPU Info

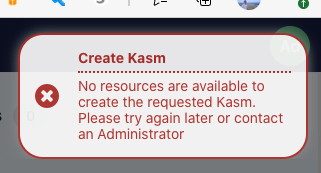

No resources are available : When users attempt to provision a workspace they get the following error message.

No Resources Error

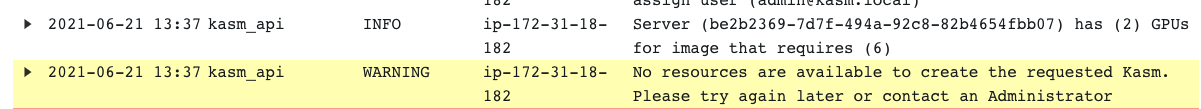

This error can occur for a number of reasons. Generally, it means that the workspace had requirements that could not be fulfilled by any of the available agents. The requirements include CPUs, RAM, GPUs, Docker networks, and zone restrictions. Check the Logging view under the Admin panel to search for the cause. The example below shows that the image was set for 6 GPUs but no agent with at least 6 GPUs could be contacted.

Logs indicating source of issue

nvidia-smi errors : The nvidia-smi tool should automatically be available inside the container. Before troubleshooting anything with Workspaces, ensure nvidia-smi works on the host directly. Next, ensure that you can manually run a container on the host with the nvidia runtime set and successfully run the nvidia-smi command from within the container. If nvidia-smi works both on the host directly and in a manually started container, open a support issue with Kasm.
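The two checks above can be run in order with a short script. This is a sketch under assumptions: the `ubuntu` image is only an example (the NVIDIA runtime normally injects nvidia-smi into the container), and the helper names `run` and `check_gpu_stack` and the `DRY_RUN` switch are hypothetical conveniences, not Kasm features.

```shell
# Execute a command, or only print it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Step 1: nvidia-smi on the host. Step 2: nvidia-smi inside a container
# started manually with the nvidia runtime. IMAGE defaults to "ubuntu",
# which is an assumption; any small image should do.
check_gpu_stack() {
    run nvidia-smi \
        || { echo "nvidia-smi failed on the host" >&2; return 1; }
    run sudo docker run --rm --runtime=nvidia --gpus all "${IMAGE:-ubuntu}" nvidia-smi \
        || { echo "nvidia-smi failed inside the container" >&2; return 2; }
}
```

Calling `check_gpu_stack` on the agent host returns 0 only if both steps succeed. A return code of 1 points at the host driver, and 2 points at the container runtime setup.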

Session With Black Screen : When running a session with an NVIDIA GPU enabled, the session may only render a black screen. Viewing the container logs of the session may show the following error:

`libEGL warning: egl: failed to create dri2 screen`

Add the following entry to the Docker Run Config of the Workspace:

`{ "environment": { "NVIDIA_DRIVER_CAPABILITIES": "all" } }`
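After changing the Docker Run Config, one way to confirm that a new session picked up the setting is to inspect the environment of its container. The sketch below assumes shell access to the agent. The helper name `driver_capabilities` and the `CONTAINER` variable are hypothetical placeholders.

```shell
# Print the value of NVIDIA_DRIVER_CAPABILITIES from KEY=VALUE lines on stdin.
# Exits non-zero if the variable is not present.
driver_capabilities() {
    awk -F= '
        $1 == "NVIDIA_DRIVER_CAPABILITIES" {
            print substr($0, index($0, "=") + 1)   # everything after the first "="
            found = 1
        }
        END { exit !found }
    '
}

# Usage on the agent (CONTAINER is a placeholder for the session container's
# name or ID; commented out because it requires Docker):
#   sudo docker logs "$CONTAINER" 2>&1 | grep 'libEGL warning'
#   sudo docker inspect --format '{{range .Config.Env}}{{println .}}{{end}}' "$CONTAINER" \
#       | driver_capabilities
```

If the second command prints `all`, the container was started with the setting. If it prints nothing and exits non-zero, the variable did not reach the container.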