Troubleshooting

Connection to Session Hangs - Requesting Kasm

Note

When troubleshooting this issue, always test by creating new sessions after making changes. Resuming a session may not have the changes applied.

Session hangs at requesting Kasm

Client-side issues

Tip

If the Kasm Workspaces deployment is internet-accessible, consider testing access via a browser using the Kasm Live Demo to help rule out client-side issues. You can sign up for the free demo at https://kasmweb.com.

Disable Browser Extensions

Some browser extensions have been known to cause conflicts when making connections to Kasm sessions. Disable any browser extensions or add-ons that are enabled. Avoid using “incognito” or “private-browsing” modes while troubleshooting.

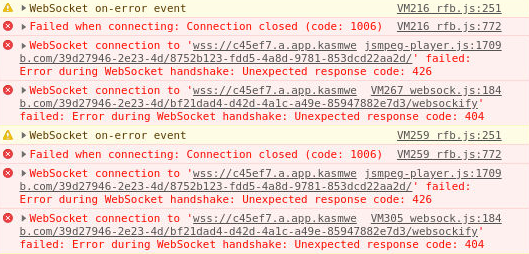

Corporate Proxies - WebSockets

Some corporate proxies, firewalls, and other security appliances may not support proxying WebSockets, which are needed to establish a connection to Kasm sessions. You can verify that your environment supports WebSockets by visiting https://www.websocket.org/echo.html.

You may see the UI cycle repeatedly between “Requesting Kasm…” and “Connecting…”. The following errors will repeat in the Console tab of the browser's Developer Tools.

Session hangs at requesting Kasm
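As an alternative to the echo page, a WebSocket upgrade handshake can be attempted directly with curl from the client network. This is a sketch under stated assumptions: the URL is not given in this guide, so pass a URL you know serves WebSockets (for example, one copied from the Network tab of the browser's Developer Tools). A server that accepts the upgrade replies with HTTP/1.1 101 Switching Protocols; a proxy that cannot carry WebSockets will typically return a different status or close the connection. An endpoint that requires authentication may also reject the request, so a non-101 result is a hint rather than proof.

```bash
#!/usr/bin/env bash
# Sketch: attempt a WebSocket upgrade handshake and report whether the server
# (or an intermediate proxy) answered "101 Switching Protocols".
# Assumption: the caller supplies a URL that is a WebSocket endpoint.

ws_handshake_ok() {
  local url="$1"
  # --http1.1: the Upgrade mechanism is an HTTP/1.1 feature.
  # -k: accept a self-signed certificate.
  # -i: print response headers; -N: do not buffer output.
  # --max-time: after a 101 the connection stays open, so bound the wait.
  # The Sec-WebSocket-Key value is the sample nonce from RFC 6455.
  curl --http1.1 -k -sS -i -N --max-time 5 \
    -H "Connection: Upgrade" \
    -H "Upgrade: websocket" \
    -H "Sec-WebSocket-Version: 13" \
    -H "Sec-WebSocket-Key: dGhlIHNhbXBsZSBub25jZQ==" \
    "$url" 2>/dev/null \
    | head -n 1 \
    | grep -qE '^HTTP/1\.1 101'
}
```

Usage: ws_handshake_ok "https://<your.kasm.server>/<websocket-path>" && echo "upgrade accepted" || echo "upgrade not accepted". Only the first response line is inspected, which is all that is needed to tell whether the upgrade was accepted.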

Server-side issues

Compatible Docker Version

Ensure the system is running compatible versions of docker and docker compose. See System Requirements for more details.

Reverse Proxy Issues

If running Kasm behind a reverse proxy such as NGINX, please consult the Reverse Proxy Guide. The reverse proxy must support WebSockets, and the Zone configuration must also be updated accordingly.
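For NGINX, proxying WebSockets generally requires the proxied location to use HTTP/1.1 and to forward the Upgrade and Connection headers. As a quick sanity check (a sketch, not a substitute for the Reverse Proxy Guide), the function below scans a full configuration dump, such as the output of sudo nginx -T, for those directives. It only confirms that the directives appear somewhere; it does not check that they sit in the location block that proxies Kasm.

```bash
#!/usr/bin/env bash
# Sketch: check an NGINX config dump (read from stdin) for the directives
# commonly needed to proxy WebSockets. Presence is necessary, not sufficient:
# this does not verify which location block each directive belongs to.

nginx_has_websocket_directives() {
  local conf missing=0 p
  conf="$(cat)"
  # One extended regex per directive we expect to find.
  local patterns=(
    'proxy_http_version[[:space:]]+1\.1'
    'proxy_set_header[[:space:]]+Upgrade'
    'proxy_set_header[[:space:]]+Connection'
  )
  for p in "${patterns[@]}"; do
    if ! grep -qE "$p" <<<"$conf"; then
      echo "missing: $p" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: sudo nginx -T 2>/dev/null | nginx_has_websocket_directives && echo "WebSocket directives found". Any missing directive is reported on stderr.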

Name Resolution and General Connectivity

If accessing Kasm Workspaces via a DNS name, ensure the Kasm Workspaces services can resolve that same name. The Kasm services must also be able to connect to the user-facing addresses. During installation, Docker creates several local sub-interfaces, and the Kasm services are assigned addresses on those bridged networks. Ensure firewalls or security groups are not blocking access.

Conduct the following tests from the Kasm Workspaces server to ensure name resolution and general connectivity are working properly. If these tests fail, correct the DNS, networking, or firewall issues.

```bash
# Test using the URL used to connect to the Kasm UI
sudo docker exec -it kasm_proxy wget https://<your.kasm.server>:443

# If your deployment uses a reverse proxy, test using the IP of the Kasm Workspaces server.
# If Kasm Workspaces was installed on a non-standard port, specify that port here.
sudo docker exec -it kasm_proxy wget https://<your.kasm.server.ip>:443
```
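When several addresses need checking, the same wget test can be looped over them. This sketch assumes the container is named kasm_proxy, as in the commands above, and that the caller is allowed to run docker (for example, the script is run with sudo). It drops -it because no interactive terminal is needed, and suppresses wget's output, so if an address fails, rerun the matching command above by hand to read the underlying error.

```bash
#!/usr/bin/env bash
# Sketch: run the wget test from inside the kasm_proxy container for each
# address given, printing PASS or FAIL per address.
# Returns non-zero if any address fails.

check_from_proxy() {
  local url rc=0
  for url in "$@"; do
    # -q: quiet; -O /dev/null: discard the downloaded page.
    if docker exec kasm_proxy wget -q -O /dev/null "$url"; then
      echo "PASS $url"
    else
      echo "FAIL $url"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: add a final line such as check_from_proxy "https://<your.kasm.server>:443" "https://<your.kasm.server.ip>:443" to a script containing the function and run it with sudo.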

If there is a NAT / DNS issue that will prevent the Kasm Workspaces services from ever contacting the user-facing address, then the address used by the services can be updated with the Kasm Workspaces server IP address.

To do this, navigate to Zones in the Kasm Admin UI, edit the Default zone with the pencil icon, and set the Upstream Auth Address to the IP of the Kasm Workspaces server.
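If you are unsure which IP to use, the address the server uses for outbound traffic is a reasonable starting point. The sketch below parses the output of ip -4 route get <destination>, which only performs a route lookup and sends no packets; 1.1.1.1 in the usage line is just an arbitrary external destination. On multi-homed or NATed hosts, confirm that the printed address is the one the Kasm services should use.

```bash
#!/usr/bin/env bash
# Sketch: print the source IPv4 address from `ip -4 route get <dest>` output
# read on stdin. Example input line:
#   1.1.1.1 via 10.0.0.1 dev eth0 src 10.0.0.5 uid 0

source_ipv4_from_route() {
  # Print the token that follows "src", then stop.
  awk '{ for (i = 1; i < NF; i++) if ($i == "src") { print $(i + 1); exit } }'
}
```

Usage: ip -4 route get 1.1.1.1 | source_ipv4_from_route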

UFW on Ubuntu and Debian Systems

Although Docker adds iptables rules that allow connections to the ‘kasm_proxy’ container from external IPs, UFW’s default rules will block connections from ‘kasm_default_network’ to the ‘kasm_proxy’ container.

Ensure that there is a firewall rule added to allow these connections.

```bash
# Test to see if UFW is installed and enabled
sudo ufw status

# If UFW is active, allow HTTPS connections
sudo ufw allow https
```
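To confirm the rule took effect, the output of sudo ufw status can be checked for an ALLOW rule on the HTTPS port. This is a sketch: it recognizes rules listed as 443 or 443/tcp, defaults to port 443, and accepts another port for non-standard installs. Rules added by application profile name are listed under the profile name and are not recognized by this check.

```bash
#!/usr/bin/env bash
# Sketch: given `ufw status` output on stdin, report whether UFW is active
# and, if so, whether an ALLOW rule exists for the port (default 443).

ufw_allows_port() {
  local port="${1:-443}" ufw_out
  ufw_out="$(cat)"
  if ! grep -q '^Status: active' <<<"$ufw_out"; then
    echo "UFW is not active; no UFW rule needed"
    return 0
  fi
  # Rules appear as e.g. "443/tcp   ALLOW   Anywhere" or "443   ALLOW   Anywhere".
  if grep -qE "^${port}(/tcp)?[[:space:]]+ALLOW" <<<"$ufw_out"; then
    echo "UFW allows port ${port}"
    return 0
  fi
  echo "UFW is active but no ALLOW rule for port ${port} was found"
  return 1
}
```

Usage: sudo ufw status | ufw_allows_port, or sudo ufw status | ufw_allows_port 8443 for a non-standard port.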

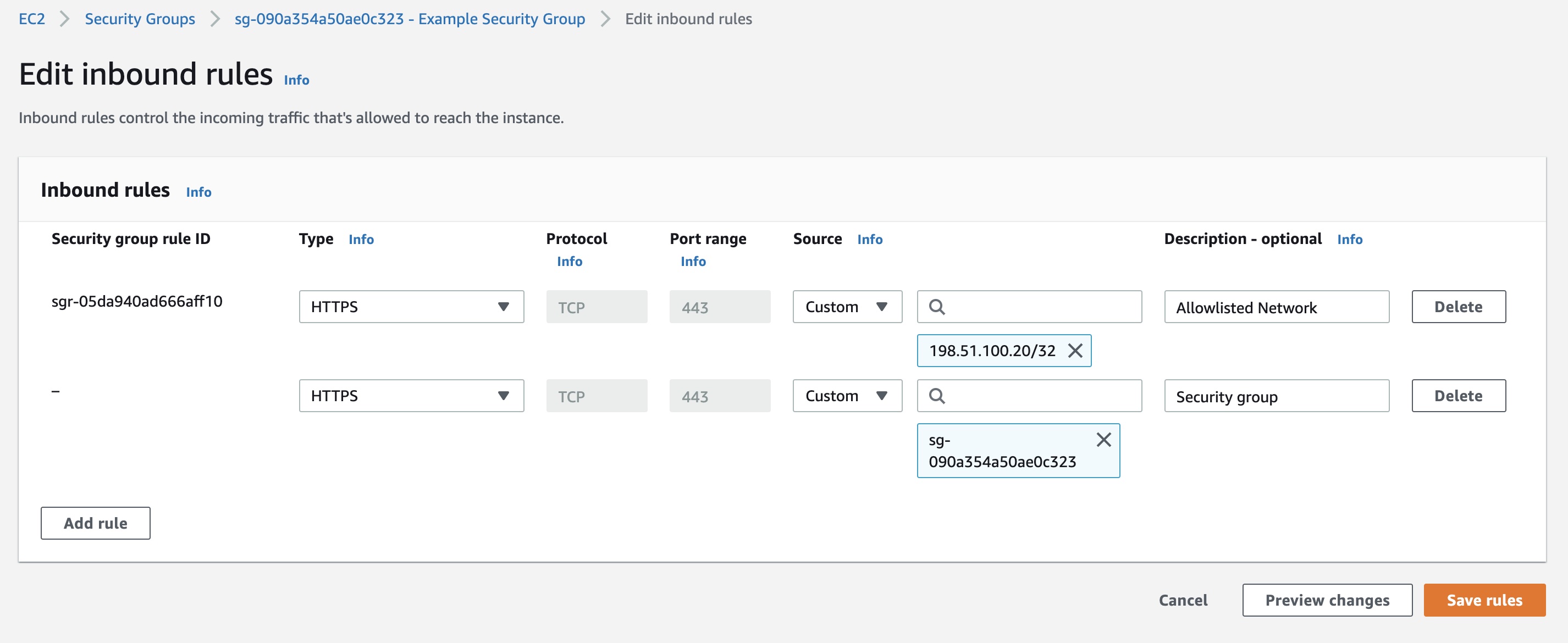

Cloud Security Groups

If the security group rule in the AWS, Azure, or OCI console that allows HTTPS into the Web App restricts connections by IP address (that is, the source is not “0.0.0.0/0”), then an additional rule must be added to allow the server to connect to itself.

To do this, add a rule to the security group that allows HTTPS connections from the same security group.

Security Group Example
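On AWS, the same rule can also be added with the AWS CLI. This sketch covers AWS only (Azure and OCI use different tooling), assumes the CLI is installed and configured with permission to modify the security group, and uses a placeholder security group ID that you must replace.

```bash
#!/usr/bin/env bash
# Sketch (AWS only): allow HTTPS into a security group from members of the
# same security group. Port defaults to 443; pass another for non-standard installs.

allow_https_from_same_sg() {
  local sg_id="$1" port="${2:-443}"
  if [ -z "$sg_id" ]; then
    echo "usage: allow_https_from_same_sg <security-group-id> [port]" >&2
    return 2
  fi
  aws ec2 authorize-security-group-ingress \
    --group-id "$sg_id" \
    --protocol tcp \
    --port "$port" \
    --source-group "$sg_id"
}
```

Usage: allow_https_from_same_sg sg-0123456789abcdef0 (placeholder ID). Using the group as its own source means any instance in the group, including the Kasm server itself, can reach the port.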

No Resources Available

This error has several possible causes; the most common ones are covered below. Before proceeding with troubleshooting, watch this video for an overview of resource allocation within Kasm Workspaces:

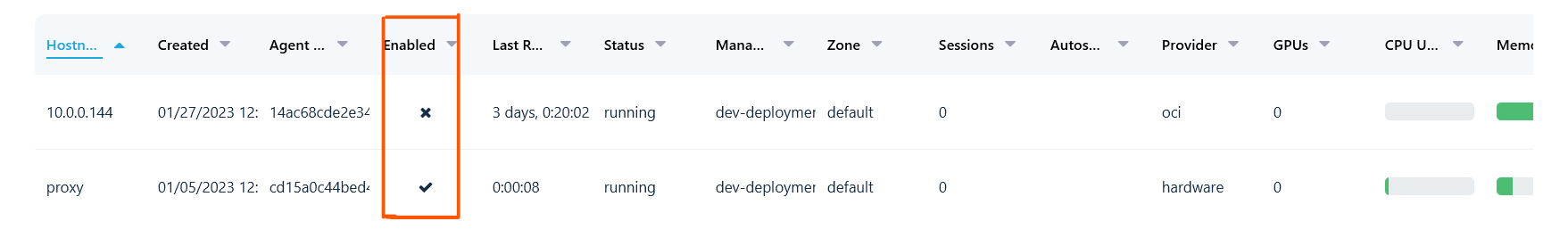

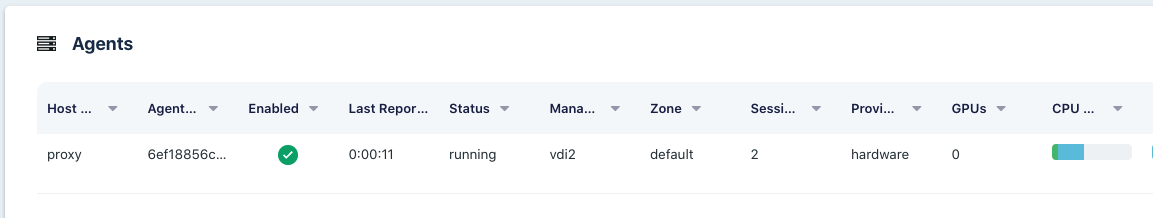

Checking That the Agents Are Online

It’s possible the admin has created enough Docker agents, but not all of them are enabled. In the Admin UI, select Compute and then Docker Agents to display a table of all agents registered with the system. One column in the table shows whether each agent is enabled. If agents that should be enabled are listed as disabled, that may be the cause of the No Resources Available error; enable them and try again after a few minutes. The table below shows both an enabled and a disabled agent.

NOTE: Disabled agents do not download new images until they are re-enabled. After an agent is enabled, it may need some time to download the latest images.

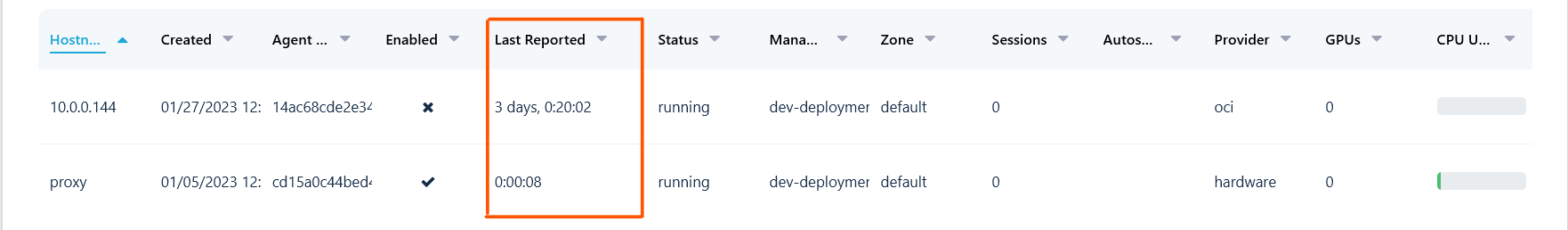

The Docker Agent Table has a Column Displaying the Enabled Status

If all the agents that are supposed to be enabled are enabled, then it may be a communication problem. The table on the Docker Agents page of the Admin UI also has a column showing the last time each agent reported in to Kasm Workspaces. The agent heartbeat interval is configurable (in milliseconds) in the agent config at /opt/kasm/current/config/app/agent.app.config.yaml; the default is 30 seconds. In the screenshot below, one agent has not reported in for more than 3 days.

The Docker Agent Table has a Column Displaying the Last Reported Time

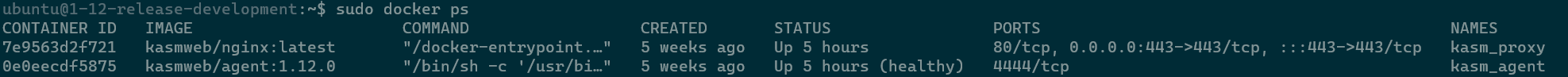

If an agent is not reporting in to Kasm Workspaces, first verify that the agent is powered on and that the Kasm agent container is running on it.

After verifying that the agent is powered on, ssh into the agent and from the command line run sudo docker ps to verify the Kasm agent docker container is running.

Here you can see that both the kasm_agent and kasm_proxy containers are up and healthy. If either container is not running, try running sudo bash /opt/kasm/bin/stop followed by sudo bash /opt/kasm/bin/start. Use the same sudo docker ps command as before to watch for the containers to start and report a healthy status, then try starting the Workspace again.

Checking the Status of the Kasm Agent and Proxy Containers
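The health check can be scripted by reading the output of sudo docker ps --format '{{.Names}} {{.Status}}', which prints one container per line as its name followed by its status (for example, "Up 2 hours (healthy)"). This is a sketch: it treats a container as healthy only when its status contains "(healthy)" and its name matches exactly.

```bash
#!/usr/bin/env bash
# Sketch: given `docker ps --format '{{.Names}} {{.Status}}'` output on stdin,
# check that kasm_agent and kasm_proxy are listed and report "(healthy)".

kasm_containers_healthy() {
  local ps_out name rc=0
  ps_out="$(cat)"
  for name in kasm_agent kasm_proxy; do
    # First field must equal the container name; the line must contain
    # "(healthy)" ("(unhealthy)" does not match).
    if awk -v n="$name" '$1 == n && /\(healthy\)/ { found = 1 } END { exit !found }' <<<"$ps_out"; then
      echo "$name: healthy"
    else
      echo "$name: not running or not healthy"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: sudo docker ps --format '{{.Names}} {{.Status}}' | kasm_containers_healthy || echo "restart with /opt/kasm/bin/stop and /opt/kasm/bin/start". Containers that are still starting report "(health: starting)", so give them a minute before rerunning.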

If the agent is powered on and the kasm_agent and kasm_proxy containers are running then it is likely a communication problem between the agent and the manager/webapp server.

A quick diagnostic is a simple curl from the agent to the manager. SSH into the agent and exec into the agent container with sudo docker exec -it kasm_agent bash, then run curl -v -k https://<manager_ip>/manager_api/__healthcheck, filling in the manager's IP in the URL. If your output looks like the example below, basic connectivity between the agent and manager is working; otherwise, you will need to troubleshoot what is disrupting communication between them.

```
root@04721c171946:/src/provision_agent# curl -v -k https://<manager_ip>/manager_api/__healthcheck
* Trying <manager_ip>:443...
* TCP_NODELAY set
* Connected to <manager_ip> (<manager_ip>) port 443 (#0)
* ALPN, offering h2
* ALPN, offering http/1.1
* successfully set certificate verify locations:
* CAfile: /etc/ssl/certs/ca-certificates.crt
  CApath: /etc/ssl/certs
* TLSv1.3 (OUT), TLS handshake, Client hello (1):
* TLSv1.3 (IN), TLS handshake, Server hello (2):
* TLSv1.3 (IN), TLS handshake, Encrypted Extensions (8):
* TLSv1.3 (IN), TLS handshake, Certificate (11):
* TLSv1.3 (IN), TLS handshake, CERT verify (15):
* TLSv1.3 (IN), TLS handshake, Finished (20):
* TLSv1.3 (OUT), TLS change cipher, Change cipher spec (1):
* TLSv1.3 (OUT), TLS handshake, Finished (20):
* SSL connection using TLSv1.3 / TLS_AES_256_GCM_SHA384
* ALPN, server accepted to use http/1.1
* Server certificate:
* subject: C=US; ST=VA; L=None; O=None; OU=DoFu; CN=dev-deployment; emailAddress=none@none.none
* start date: Jan 5 17:44:03 2023 GMT
* expire date: Jan 4 17:44:03 2028 GMT
* issuer: C=US; ST=VA; L=None; O=None; OU=DoFu; CN=dev-deployment; emailAddress=none@none.none
* SSL certificate verify result: self signed certificate (18), continuing anyway.
> GET /manager_api/__healthcheck HTTP/1.1
> Host: <manager_ip>
> User-Agent: curl/7.68.0
> Accept: */*
>
* TLSv1.3 (IN), TLS handshake, Newsession Ticket (4):
* TLSv1.3 (IN), TLS handshake, Newsession Ticket (4):
* old SSL session ID is stale, removing
* Mark bundle as not supporting multiuse
< HTTP/1.1 200 OK
< Server: nginx
< Date: Tue, 31 Jan 2023 18:03:34 GMT
< Content-Type: application/json; charset=UTF-8
< Content-Length: 12
< Connection: keep-alive
< Etag: "9463df0cbfa20eb19a5b7d1fa0b99cf9a0cbf56f"
< Strict-Transport-Security: max-age=63072000
<
* Connection #0 to host <manager_ip> left intact
{"ok": true}
```
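The same check can be run non-interactively from the agent host, succeeding only when the body is {"ok": true} as in the example output. This sketch assumes the container is named kasm_agent and has curl available (as the example above shows), and that the caller can run docker, for example inside a script run with sudo. The manager address is whatever you would put in the curl command above.

```bash
#!/usr/bin/env bash
# Sketch: call the manager healthcheck from inside the kasm_agent container
# and succeed only if the response body is {"ok": true}.

manager_healthcheck() {
  local manager="$1" body
  # -s: silent; -k: accept a self-signed certificate;
  # --max-time: fail instead of hanging on a blocked connection.
  body="$(docker exec kasm_agent curl -s -k --max-time 10 \
    "https://${manager}/manager_api/__healthcheck")" || {
    echo "curl could not complete the request to ${manager}" >&2
    return 1
  }
  if grep -qE '"ok":[[:space:]]*true' <<<"$body"; then
    echo "manager healthcheck OK"
  else
    echo "unexpected healthcheck response: ${body}" >&2
    return 1
  fi
}
```

Usage: manager_healthcheck <manager_ip>. A curl failure usually points at a network path problem, while an unexpected body (for example an error page from a proxy) suggests the request reached something other than the manager API.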

Here are a few items to verify when experiencing communication issues:

- Misconfigured cloud provider firewall.
- Misconfigured firewall on the agent.
- Misconfigured firewall on the webapp servers.
- Misconfigured DNS.
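To narrow down which of these items is at fault, it can help to separate name resolution from TCP reachability, run from the agent host. This sketch uses getent (the system resolver, so it honors /etc/hosts and the configured DNS servers), bash's /dev/tcp pseudo-device, and coreutils timeout. If a name resolves but the port is unreachable, look at the firewalls; if the name does not resolve, look at DNS.

```bash
#!/usr/bin/env bash
# Sketch: two quick checks for the items above.
#   resolves HOST        - does HOST resolve via the system resolver?
#   port_open HOST PORT  - can a TCP connection be opened within 5 seconds?

resolves() {
  getent hosts "$1" >/dev/null
}

port_open() {
  local host="$1" port="$2"
  # The redirection opens a TCP connection; ":" is a no-op command.
  timeout 5 bash -c ': </dev/tcp/"$1"/"$2"' _ "$host" "$port" 2>/dev/null
}
```

Usage: resolves <manager_hostname> && port_open <manager_ip> 443 && echo "DNS and TCP 443 OK"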

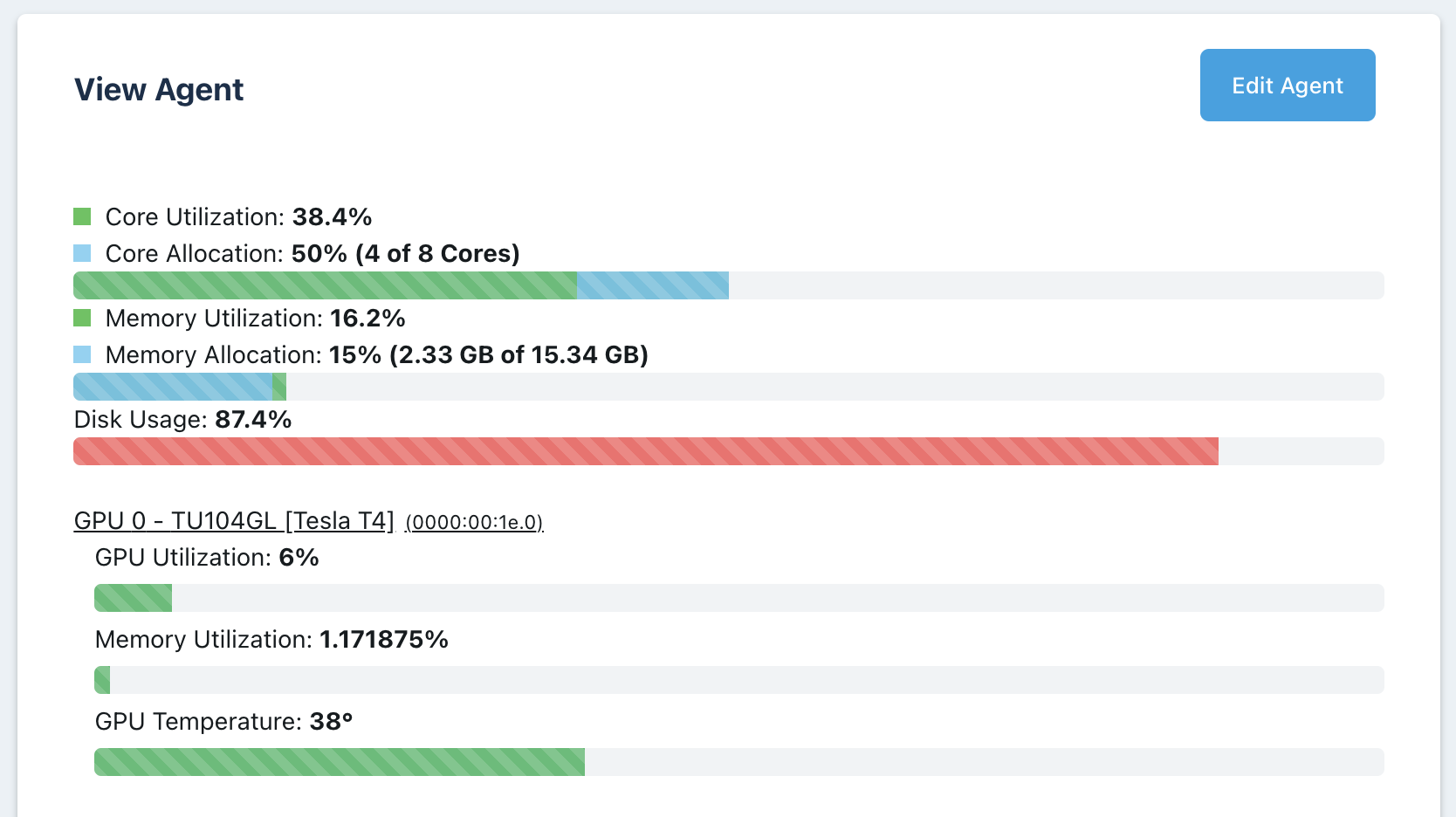

Not enough Free Resources

If all the agents are enabled and you are still seeing the No Resources Available error, it may be that none of the available agents has enough free resources to provision the Workspace container. CPUs, memory, and GPUs are specified when the Workspace is defined, and there must be a Kasm agent with at least that many CPUs, that much memory, and that many GPUs available in order to start the Workspace. If no agent has the required resources free, there are three options for increasing available resources.

Override the agents to simulate additional resources.

An agent has two sets of resources: the physical resources actually assigned to the agent, and the resources the administrator specifies that Kasm Workspaces should consider the agent to have. The latter is called overriding. The documentation link has more information on what overriding is and how to use it.

Even with overridden resources, Docker cannot start a container that requires more resources than the agent host physically has available.

Add additional resources to the agent VMs.

If your agents are virtual machines rather than bare-metal hardware, you can add resources to the individual VMs that the agents are installed on. After modifying the VM's assigned resources, restart the VM. When the Kasm agent starts, it should automatically pick up the increased resources. If any overrides are configured on that agent, update them to reflect the agent's new available resources.

Add additional agents

If you have already added resource overrides to your agents and increased the physical resources available to the agent VMs, the next best option is to create additional VMs and install and configure them as additional Kasm agents. Refer to the multi-server installation docs for details.

Kasm Workspaces has the ability to dynamically create additional agents to satisfy demand. See the Autoscale documentation for more details.

It is also possible to adjust the resources that a Workspace requires, however lowering the resources assigned to a Workspace container will have performance implications for users.

All of these options give Kasm administrators a great deal of flexibility in how they architect and design their Kasm Workspaces solution to ensure that there are enough resources available for their users. It’s important to take into account not just the nominal usage case, but also the maximum expected usage scenario in both users and sessions to ensure adequate resources are available. Kasm provides a deployment and sizing guide that can help with deciding on the best Kasm architecture.
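The placement rule and the physical ceiling described in this section can be checked by hand. The sketch below is illustrative: physical_resources reports the host's installed CPUs, total memory in MB, and NVIDIA GPUs (0 if nvidia-smi is absent; other GPU vendors are not counted), which is the limit Docker enforces regardless of overrides, and is also a way to confirm a VM picked up added resources after a restart. agent_fits applies the rule that an agent's free CPUs, memory, and GPUs must each meet or exceed what the Workspace requests; memory in MB on both sides is an assumption of this example.

```bash
#!/usr/bin/env bash
# Sketch: two helpers for reasoning about agent capacity.

# Print this host's physical resources as "cpus=N memory_mb=N gpus=N".
physical_resources() {
  local cpus mem_mb gpus=0
  cpus="$(nproc --all)"   # installed processing units
  # MemTotal is reported in kB; convert to MB.
  mem_mb="$(awk '/^MemTotal:/ { print int($2 / 1024) }' /proc/meminfo)"
  if command -v nvidia-smi >/dev/null 2>&1; then
    # nvidia-smi -L prints one "GPU <n>: ..." line per GPU.
    gpus="$(nvidia-smi -L 2>/dev/null | grep -c '^GPU ')"
  fi
  echo "cpus=${cpus} memory_mb=${mem_mb} gpus=${gpus}"
}

# Succeed only if every free resource meets or exceeds the requirement.
# Args: free_cpu free_mem_mb free_gpu req_cpu req_mem_mb req_gpu
# CPU values may be fractional, so the comparison is done in awk.
agent_fits() {
  awk -v fc="$1" -v fm="$2" -v fg="$3" -v rc="$4" -v rm="$5" -v rg="$6" \
    'BEGIN { exit !(fc + 0 >= rc + 0 && fm + 0 >= rm + 0 && fg + 0 >= rg + 0) }'
}
```

Usage: agent_fits 2 8192 0 4 4096 0 fails because 2 free CPUs are fewer than the 4 requested, even though memory and GPUs are sufficient; every resource must fit at once.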

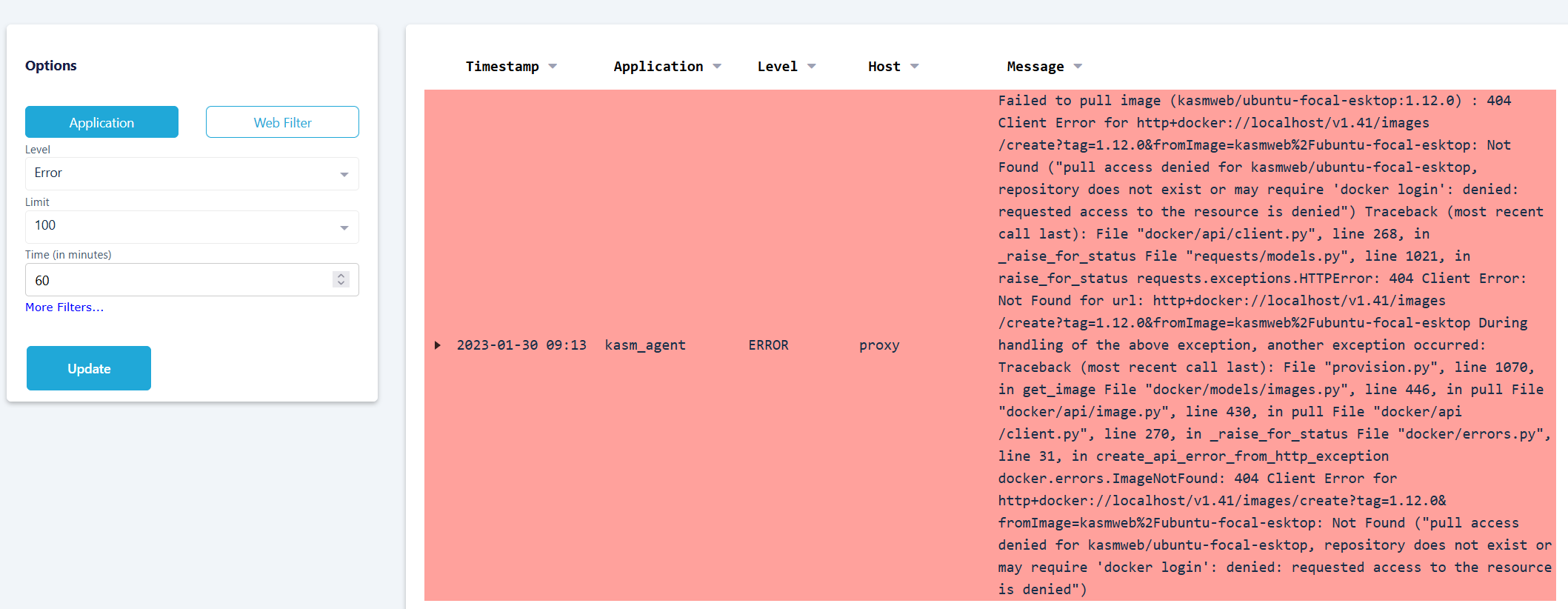

The Agent Doesn’t Have the Image Requested

After an image is added to Kasm Workspaces, each agent needs to download that image. The time this takes depends on the available bandwidth and the size of the image. It is also possible that an error, such as a typographical error in the image name, prevents the agents from downloading the image. To determine whether that is the case, review the logs for errors similar to this:

Example Error Message for Agent Unable to Pull Image

In this case, the error message shows that kasmweb/ubuntu-focal-esktop is misspelled: it is missing the d in desktop. Because that image does not exist, the agent cannot pull it, which results in the No Resources Available error.

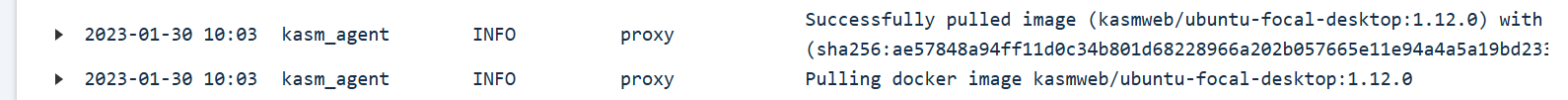

If the Workspace definition is valid, you should see two messages from the Kasm agent: one when it starts pulling the image and one when the image has been pulled successfully. If the Successfully pulled image message is not present and there is no error in the logs, the likely cause of the No Resources Available error is that the agent has not finished pulling the image yet. Wait for the agent to finish pulling the image, and the error should resolve.

Successful Log Messages for Kasm Agent Image Pull

Another way to verify whether the image is present on the Kasm agents is to SSH to each agent and run sudo docker image ls | grep ubuntu, substituting the name of the image you are looking for in place of ubuntu.
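A grep on the image list matches partial names, so a similar image can hide a missing one. As an alternative sketch, docker image inspect checks an exact reference; note that a reference without a tag is treated as :latest, so use the same name and tag as the Workspace configuration. If the image is missing, running sudo docker pull with the same reference on the agent will usually show the registry's error directly.

```bash
#!/usr/bin/env bash
# Sketch: check whether an exact image reference (name:tag) exists on this
# agent's Docker host. A misspelled reference such as
# kasmweb/ubuntu-focal-esktop is reported as missing.

image_present() {
  local ref="$1"
  if docker image inspect "$ref" >/dev/null 2>&1; then
    echo "present: $ref"
  else
    echo "missing: $ref"
    return 1
  fi
}
```

Usage: image_present <image>:<tag>, run on each agent with sufficient privileges to use docker.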

Another possible problem is that the image specified in the Workspace configuration was built for a different architecture than the agent it is trying to run on.
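One way to check for this is to compare the architecture Docker records for the image (via docker image inspect --format '{{.Architecture}}', which prints values such as amd64 or arm64) with the host architecture from uname -m (which prints values such as x86_64 or aarch64). The sketch below maps only the two common cases; other values are passed through unchanged, which is an assumption that may not hold for every platform.

```bash
#!/usr/bin/env bash
# Sketch: compare an image architecture (Docker naming) with the host
# architecture (uname -m naming).

docker_arch_for_uname() {
  case "$1" in
    x86_64) echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *) echo "$1" ;;   # other values passed through unchanged
  esac
}

# Args: image_arch [host_uname_m]; the host value defaults to `uname -m`.
image_matches_host_arch() {
  local image_arch="$1" host_arch
  host_arch="$(docker_arch_for_uname "${2:-$(uname -m)}")"
  [ "$image_arch" = "$host_arch" ]
}
```

Usage: image_matches_host_arch "$(sudo docker image inspect --format '{{.Architecture}}' <image>)" || echo "architecture mismatch"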

GPU Passthrough Support

Agents table shows 0 GPUs

The first image below is the Agents list; the GPUs column should show the number of GPUs available on each agent. The second image is the top of the View Agent panel, which shows details about the GPU(s) if any were detected. Finally, the third image shows the GPU Info details on the View Agent panel.

Kasm uses multiple methods to gather information about GPUs. If the GPU Info JSON contains some, but not all, information about your GPUs and the agent is not reporting any GPUs, ensure that the Nvidia Docker toolkit is installed and that you restarted the Docker daemon. Run the following command and check that nvidia is listed:

```bash
sudo docker system info | grep Runtimes
```

To enable GPU support on an agent, the Nvidia GPU acceleration driver must be installed on the agent. Refer to the GPU installation guide for more information.

Agents List

Agent Details

Agent GPU Info

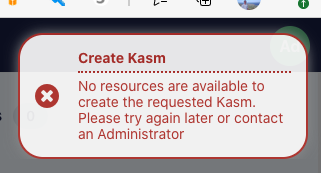

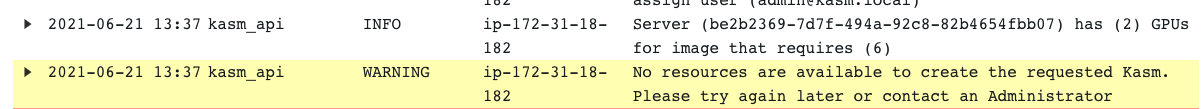
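The runtime check can also be made exact, so that a runtime whose name merely contains "nvidia" is not mistaken for it. This sketch reads docker system info output on stdin and looks for nvidia as a whole token on the Runtimes line. If it is missing after installing the toolkit, restarting the daemon (sudo systemctl restart docker on systemd hosts) is the step this section calls for.

```bash
#!/usr/bin/env bash
# Sketch: given `docker system info` output on stdin, succeed if "nvidia"
# appears as a whole token on the Runtimes line.

has_nvidia_runtime() {
  awk '/^[[:space:]]*Runtimes:/ { for (i = 2; i <= NF; i++) if ($i == "nvidia") found = 1 }
       END { exit !found }'
}
```

Usage: sudo docker system info | has_nvidia_runtime && echo "nvidia runtime registered"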

No resources are available: When users attempt to provision a Workspace, they get the following error message.

No Resources Error

There are a number of reasons this error message can occur. Generally, it means that the Workspace had requirements that could not be fulfilled by any of the available agents. The requirements include CPUs, RAM, GPUs, Docker networks, and zone restrictions. Check the Logging view under the Admin panel to search for the cause. The example below shows that the image was set to require 6 GPUs, but no agent with at least 6 GPUs could be contacted.

Logs indicating source of issue

nvidia-smi errors: The nvidia-smi tool should automatically be available inside the container. Before troubleshooting anything in Workspaces, ensure nvidia-smi works directly on the host. Next, ensure that you can manually run a container on the host with the nvidia runtime set and successfully run the nvidia-smi command from within that container. If nvidia-smi works both directly on the host and in a manually started container, open a support issue with Kasm.
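The two manual checks above can be run in order with a small script. This is a sketch: it uses the ubuntu image only as a convenient public base image (any image should work, since nvidia-smi is expected to be made available inside the container, as described above), and the docker run flags follow the NVIDIA Container Toolkit's sample workload. The distinct return codes show which stage failed.

```bash
#!/usr/bin/env bash
# Sketch: 1) nvidia-smi on the host, 2) nvidia-smi inside a throwaway
# container started with the nvidia runtime.
# Returns 1 if the host check fails, 2 if the container check fails.

gpu_smoke_test() {
  local image="${1:-ubuntu}"
  if ! nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi fails on the host: fix the host driver first" >&2
    return 1
  fi
  if ! docker run --rm --runtime=nvidia --gpus all "$image" nvidia-smi >/dev/null 2>&1; then
    echo "nvidia-smi fails inside a container: check the toolkit and docker runtime config" >&2
    return 2
  fi
  echo "nvidia-smi works on the host and in a container"
}
```

Usage: run gpu_smoke_test on the agent as a user allowed to use docker. Only when it reports success for both stages does the problem likely lie with Workspaces rather than the host setup.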